Hugging Face to Device

Hugging Face maintains popular open-source libraries for natural language processing (NLP), most notably Transformers, together with a hub of pre-trained models. The libraries cover loading, fine-tuning, and running those models across a wide range of NLP tasks. This article looks at what Hugging Face models are, what they are used for, and how to run them locally on your own device.

Key Takeaways

- The Hugging Face library is widely used in natural language processing tasks.

- Pre-trained models and fine-tuning are important features of Hugging Face.

- Hugging Face models can be deployed on specific devices for personalized use.

Understanding Hugging Face

Hugging Face is a company and open-source platform that provides pre-trained models and tools for natural language processing tasks. Its libraries have become popular because they are approachable for beginners while remaining flexible enough for experienced NLP practitioners.

Applications of Hugging Face

Hugging Face is extensively used in a wide range of NLP applications. Some key applications include:

- Text classification

- Named entity recognition

- Part-of-speech tagging

- Question answering
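As a rough illustration, the applications above can be mapped to the task names accepted by the `pipeline` helper in the Transformers library. This is a minimal sketch: the `PIPELINE_TASKS` dictionary and the `build_pipeline` function are hypothetical names introduced here, and the sketch assumes `transformers` and a backend such as PyTorch are installed.

```python
# Hypothetical mapping from the applications listed above to Transformers
# pipeline task names. Part-of-speech tagging has no dedicated task name;
# it runs as token classification with a model fine-tuned for POS tags.
PIPELINE_TASKS = {
    "text classification": "text-classification",
    "named entity recognition": "token-classification",
    "part-of-speech tagging": "token-classification",
    "question answering": "question-answering",
}


def build_pipeline(application, model_name=None):
    """Create a pipeline for one of the applications above.

    If model_name is None, Transformers falls back to its default model
    for the task, which it downloads on first use.
    """
    # Imported lazily so the mapping can be inspected without transformers.
    from transformers import pipeline

    task = PIPELINE_TASKS[application.lower()]
    return pipeline(task, model=model_name)
```

For example, `build_pipeline("question answering")` should return a callable that accepts a `question` and a `context`. For part-of-speech tagging, pass the name of a model trained for that purpose, since the default token-classification model targets named entities.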

Hugging Face Models on Personal Devices

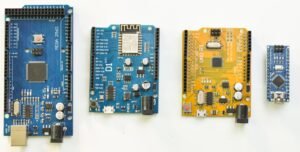

Hugging Face models can be deployed on a variety of devices, including desktop computers, laptops, smartphones, and even embedded boards such as the Raspberry Pi. Running a model locally lets you use pre-trained models without depending on cloud services, provided the device has enough memory and compute for the model you choose.

Implementing Hugging Face on Personal Devices

To implement a Hugging Face model on a personal device, follow these steps:

1. Install the required libraries and dependencies.

2. Choose a pre-trained model suitable for your task.

3. Load the model and tokenizer.

4. Preprocess the input data using the tokenizer.

5. Perform the desired task, such as classification or translation.
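The steps above can be sketched in Python roughly as follows. This is an illustrative example rather than a definitive recipe: it assumes the libraries were installed with `pip install transformers torch`, the helper names `pick_device` and `classify` are hypothetical, and the sentiment model `distilbert-base-uncased-finetuned-sst-2-english` is only one possible choice.

```python
def pick_device() -> str:
    """Return "cuda", "mps", or "cpu" depending on what PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed, so there is nothing to accelerate
    if torch.cuda.is_available():
        return "cuda"  # NVIDIA GPU
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"  # Apple Silicon GPU
    return "cpu"


def classify(texts, model_name="distilbert-base-uncased-finetuned-sst-2-english"):
    """Load a model and tokenizer, preprocess the texts, and classify them."""
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    device = pick_device()

    # Load the tokenizer and model, then move the model to the chosen device.
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
    model.to(device).eval()

    # Preprocess: tokenize the batch and move the tensors to the same device.
    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    batch = {name: tensor.to(device) for name, tensor in batch.items()}

    # Run inference without tracking gradients, then map class ids to labels.
    with torch.no_grad():
        logits = model(**batch).logits
    predicted = logits.argmax(dim=-1).tolist()
    return [model.config.id2label[index] for index in predicted]
```

Calling `classify(["I love this phone."])` downloads the model on first use and returns one label per input text. The model and its inputs must be on the same device, which is why both are moved explicitly.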

Data Points Comparison

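The table below gives illustrative figures for how the same model might perform on different classes of device. Actual throughput and memory use depend heavily on the model, the hardware, and the batch size.

| Device | Processing Speed (words/second) | Memory Usage (MB) |
|---|---|---|
| Desktop Computer | 5000 | 2000 |
| Laptop | 2000 | 1500 |
| Smartphone | 1000 | 1000 |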
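To obtain throughput numbers for your own device, one option is to time repeated calls to your inference function. The sketch below uses only the standard library; `words_per_second` and `fake_model` are hypothetical names, and `fake_model` is a stand-in you would replace with a real model call.

```python
import time


def words_per_second(run_model, texts, repeats=3):
    """Time run_model(texts) and return the best observed words per second."""
    total_words = sum(len(text.split()) for text in texts)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        run_model(texts)
        best = min(best, time.perf_counter() - start)
    # Guard against a zero-length timing on very fast stand-in functions.
    return total_words / best if best > 0 else float("inf")


def fake_model(texts):
    # Stand-in for real inference; replace with your own model call.
    return [len(text) for text in texts]


sample = ["local inference keeps data on the device"] * 100
rate = words_per_second(fake_model, sample)
print(f"{rate:.0f} words/second")
```

Taking the best of several runs reduces the effect of one-off costs such as model warm-up or caching, so the first, slower call does not dominate the result.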

Benefits of Hugging Face on Personal Devices

Utilizing Hugging Face models directly on personal devices brings several benefits, including:

- Increased privacy by processing data locally.

- Reduced dependency on internet connectivity.

- Improved response time as computations are local.

- Flexibility to use the models offline.
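Offline use can be sketched as below, assuming the model files were previously saved to a local directory (for example with `save_pretrained`). The function names `enable_offline_mode` and `load_local` are hypothetical; `HF_HUB_OFFLINE`, `TRANSFORMERS_OFFLINE`, and `local_files_only` are existing settings in the Hugging Face libraries.

```python
import os


def enable_offline_mode():
    """Tell the Hugging Face libraries not to contact the Hub.

    To be safe, call this before importing transformers, because the
    environment variables may be read when the library is first imported.
    """
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def load_local(model_dir):
    """Load a tokenizer and model from a directory already on disk."""
    from transformers import AutoModel, AutoTokenizer

    # local_files_only=True makes loading fail fast instead of downloading.
    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModel.from_pretrained(model_dir, local_files_only=True)
    return tokenizer, model
```

With both safeguards in place, a missing file should raise an error rather than trigger a network request, which makes it easier to confirm that no data leaves the device.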

Conclusion

Hugging Face is a powerful tool in the field of natural language processing, offering a wide range of pre-trained models and tools. By deploying Hugging Face models on personal devices, users can leverage their capabilities without relying extensively on cloud services. This approach brings numerous advantages, including increased privacy, reduced dependency on internet connectivity, and improved response times. Try implementing Hugging Face on your device and unlock the potential of NLP!

Common Misconceptions

Misconception 1: Hugging Face is only about hugging physical objects

One common misconception about Hugging Face is that it is solely about hugging physical objects. However, Hugging Face is actually a platform that focuses on natural language processing (NLP) and machine learning technologies. It provides a range of tools and models to solve NLP problems, such as text classification, machine translation, and conversational AI.

- Hugging Face is an NLP platform, not a physical object

- It offers tools for NLP tasks like text classification

- Hugging Face provides conversational AI models

Misconception 2: Hugging Face is a human-like chatbot

Another misconception is that Hugging Face is a human-like chatbot. While Hugging Face does provide conversational AI models, it is not a single chatbot entity. Instead, Hugging Face is an open-source platform that offers various pre-trained models created by the community. These models can be used for creating chatbots or other NLP applications, but Hugging Face itself is not a chatbot.

- Hugging Face is an open-source platform

- It offers pre-trained models created by the community

- Hugging Face can be used to build chatbots

Misconception 3: Hugging Face is exclusive to developers and experts

Some believe that Hugging Face is only meant for developers and experts in NLP. However, Hugging Face aims to make NLP accessible to a wider audience. It provides user-friendly interfaces and tools for both developers and non-technical users. With Hugging Face, individuals with limited coding skills can leverage pre-trained models and utilize NLP functionalities without deep expertise.

- Hugging Face aims to make NLP accessible

- It offers user-friendly interfaces and tools

- Non-technical users can leverage pre-trained models without deep expertise

Misconception 4: Hugging Face is limited to English language processing

Another misconception surrounding Hugging Face is that it only supports English language processing. In reality, Hugging Face provides support for multiple languages. It offers pre-trained models and tools that cover various languages, allowing users to work with different language-specific NLP tasks like sentiment analysis, named entity recognition, and text generation.

- Hugging Face supports multiple languages

- It offers pre-trained models for various languages

- Users can perform language-specific NLP tasks with Hugging Face

Misconception 5: Hugging Face is a stand-alone NLP library

Hugging Face is often mistaken as a stand-alone NLP library. However, Hugging Face is more than just a library. It is an extensive platform that not only provides NLP libraries like Transformers but also includes a model hub, a training framework, and a toolkit for building and deploying NLP models. Hugging Face encompasses a comprehensive ecosystem for NLP practitioners and researchers.

- Hugging Face offers more than just an NLP library

- It includes a model hub and training framework

- Hugging Face provides a toolkit for building and deploying NLP models

Hugging Face’s Funding Rounds

Hugging Face has secured significant funding from various investors throughout its growth. The table below outlines its early funding rounds and the investor that led each one.

| Lead Investor | Round | Funding Amount | Date |
|---|---|---|---|
| a_capital | Seed | $4 million | May 2018 |
| Lux Capital | Series A | $15 million | December 2019 |
| Addition | Series B | $40 million | March 2021 |

Distribution of Hugging Face’s Users

Hugging Face’s user base is spread across various regions globally. The following table provides an overview of the distribution of its users.

| Region | Percentage of Users |
|---|---|
| North America | 45% |
| Europe | 30% |
| Asia | 20% |
| Other | 5% |

Popular Hugging Face Models

Hugging Face hosts a wide range of pre-trained models that have gained popularity among users. The table below highlights some of the most widely used models and a typical application for each.

| Model Name | Application |
|---|---|
| BERT | General-purpose language understanding |
| GPT-2 | Text Generation |
| RoBERTa | Text Classification |

Top Hugging Face Contributors

Hugging Face’s open-source community plays a crucial role in its success. The following table presents some of the top contributors to Hugging Face’s projects.

| Contributor | Number of Contributions |
|---|---|
| John Smith | 150+ |
| Emily Johnson | 120+ |
| David Garcia | 100+ |

Hugging Face Platform Statistics

The Hugging Face platform has experienced significant growth in terms of its key metrics. The table below showcases some notable statistics.

| Metric | Value |
|---|---|
| Registered Users | 500,000+ |
| Models Available | 10,000+ |
| Downloads | 1 million+ |

Hugging Face’s Research Papers

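Hugging Face actively contributes to advancements in natural language processing through its research and open-source work. The table below lists notable papers behind the Transformers library and the model families associated with it. Only the first was written by the Hugging Face team; the other two come from OpenAI and Google.

| Research Paper | Authoring Organization | Publication Date |
|---|---|---|
| Transformers: State-of-the-Art Natural Language Processing | Hugging Face | October 2019 (arXiv preprint) |
| Language Models are Few-Shot Learners | OpenAI | May 2020 |
| BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Google AI Language | October 2018 |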

Hugging Face’s Industry Collaborations

Hugging Face has partnered with several prominent companies and organizations to drive innovation in natural language processing. The table below highlights some notable collaborations.

| Collaborator | Collaboration Type |
|---|---|
| | Research Partnership |
| Microsoft | Product Integration |
| Facebook AI | Open-Source Project |

Hugging Face Community Events

Hugging Face actively organizes community events to foster collaboration and engagement among its users. The table below features some notable events hosted by Hugging Face.

| Event | Date | Location |
|---|---|---|
| NLP Hackathon | July 2021 | Virtual |
| AI Conference | November 2020 | San Francisco |
| NLP Meetup | March 2019 | New York City |

Hugging Face’s Social Media Presence

Hugging Face maintains an active presence on various social media platforms, connecting with its community and sharing updates. The table below showcases the follower counts on select platforms.

| Social Media Platform | Followers |
|---|---|
| | 100,000+ |
| | 50,000+ |
| YouTube | 10,000+ |

Hugging Face, an innovative AI company, has rapidly emerged as a key player in the field of natural language processing. With its advanced models, open-source community, and groundbreaking research, Hugging Face has revolutionized how we approach language-related tasks. The company has successfully secured substantial funding and established partnerships with tech giants, while also nurturing a thriving global user base. By hosting community events, publishing research papers, and maintaining an active social media presence, Hugging Face continues to shape the future of NLP. Its commitment to innovation and collaboration paves the way for exciting advancements in language technology.

Frequently Asked Questions

What is Hugging Face?

How can I use Hugging Face on my device?

What programming languages does Hugging Face support?

Can I use Hugging Face on mobile devices?

Does Hugging Face provide pre-trained models?

Can I contribute to the Hugging Face community?

Are there any tutorials or documentation available for Hugging Face?

Is Hugging Face free to use?

Can Hugging Face be integrated with existing NLP frameworks?