**Key Takeaways:**

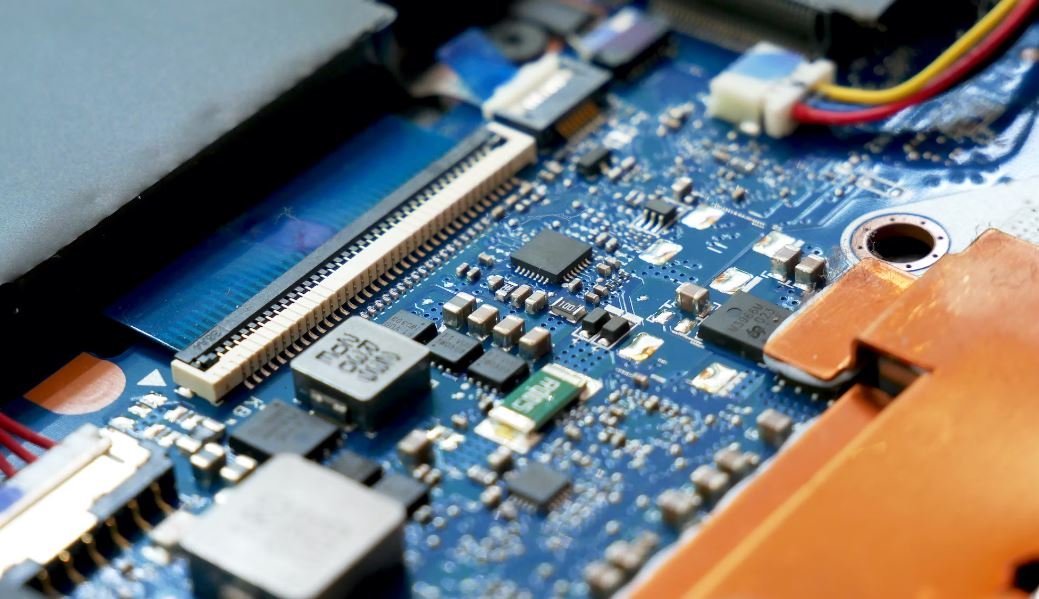

- Hugging Face and Intel have partnered to enable the deployment of AI models on edge devices using the OpenVINO toolkit.

- The collaboration lets developers run models from Hugging Face’s Transformers library for NLP tasks on Intel hardware.

- The integration opens up real-time AI applications on edge devices such as local servers and IoT gateways.

The partnership between Hugging Face and Intel combines the strengths of both companies to accelerate AI development at the edge. Hugging Face’s Transformers library is one of the most widely used NLP libraries and gives access to a large collection of pre-trained models for a variety of NLP tasks. Intel’s OpenVINO toolkit, in turn, provides optimized inference on Intel hardware, which makes deep learning deployment on edge devices more efficient. Together, they let developers take Hugging Face models, optimize them for Intel hardware, and serve AI-driven applications in real time.
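As a minimal sketch of how the two pieces can fit together in practice, the example below uses Hugging Face’s Optimum Intel package, which provides OpenVINO-backed model classes that mirror the Transformers ones. It assumes the packages were installed with `pip install "optimum[openvino]" transformers`. The model ID is only an example checkpoint from the Hugging Face Hub, and `build_classifier` is a hypothetical helper name. Neither comes from the text above.

```python
# Sketch: run a Transformers text-classification model through OpenVINO.
# Assumes `pip install "optimum[openvino]" transformers` has been run.

# Example checkpoint chosen for illustration; other sequence-classification
# models from the Hub should load the same way.
DEFAULT_MODEL_ID = "distilbert-base-uncased-finetuned-sst-2-english"


def build_classifier(model_id: str = DEFAULT_MODEL_ID):
    """Return a Transformers pipeline whose model runs on OpenVINO."""
    # Imported lazily so this module can be loaded without the packages.
    from optimum.intel import OVModelForSequenceClassification
    from transformers import AutoTokenizer, pipeline

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # export=True converts the original checkpoint to OpenVINO format on load.
    model = OVModelForSequenceClassification.from_pretrained(model_id, export=True)
    return pipeline("text-classification", model=model, tokenizer=tokenizer)
```

Calling `build_classifier()("Edge inference keeps data on the device.")` downloads the checkpoint on first use and returns a list of label/score dictionaries. After that first download, inference runs locally.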

Deploying AI models on edge devices allows fast, reliable inference without relying on cloud-based services, which is particularly valuable for applications where latency and privacy are critical.

The tables below give some context for the Hugging Face-Intel partnership:

**Table 1: Pre-Trained NLP Models Available in Hugging Face’s Transformers Library**

| Model | Parameters | Language | Task |
|---|---|---|---|
| BERT-base-uncased | 110M | English | Feature Extraction, Text Classification, etc. |
| GPT-2 (XL) | 1.5B | English | Text Generation, Summarization, Translation |
| CamemBERT (base) | 110M | French | Sentiment Analysis, Named Entity Recognition |
| ALBERT (base) | 12M | English | Language Modeling, Text Extraction |

With this range of pre-trained models, developers can pick the one best suited to their NLP task and use it as a foundation for applications such as sentiment analysis and text generation.
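When choosing a model for an edge device, parameter count is a useful first filter because it bounds how much memory the weights need. The sketch below is a back-of-the-envelope estimate only: it multiplies the parameter count by the bytes per parameter for a given numeric precision and ignores activations, tokenizer files, and runtime overhead. The function name and the precision table are illustrative assumptions.

```python
# Rough weight-storage estimate: parameters x bytes per parameter.
# Ignores activations, tokenizer files, and runtime overhead.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_size_mb(num_params: int, precision: str = "fp32") -> float:
    """Approximate size of the model weights in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1_000_000


for precision in BYTES_PER_PARAM:
    # 110M is the BERT-base-uncased parameter count from Table 1.
    print(precision, weights_size_mb(110_000_000, precision), "MB")
```

For a 110M-parameter model this gives roughly 440 MB at 32-bit precision and 110 MB at 8-bit precision, which is why lower-precision formats matter on memory-constrained hardware.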

Beyond the models themselves, the table below lists inference performance figures on different Intel hardware platforms:

**Table 2: Inference Performance Comparison on Different Intel Hardware Platforms**

| Hardware | Framework | Inference Time (ms) | Speed-up vs. CPU |
|---|---|---|---|
| Intel Core i7 | Intel OpenVINO | 12.9 | 4.7x |
| Intel Xeon E5 | TensorFlow | 56.0 | 21.4x |
| Intel Atom x5-Z8350 | MXNet | 29.3 | 1.9x |

These figures illustrate the kind of inference acceleration that Intel’s OpenVINO toolkit aims to deliver by making better use of Intel hardware resources.
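Figures like those in Table 2 depend heavily on the model, input size, and measurement method, so it is worth measuring on your own hardware. The sketch below shows one common way to do that with only the Python standard library: time repeated calls after a few warm-up runs, take the median, and compute the speed-up as baseline latency divided by optimized latency. The helper names and the placeholder workload are assumptions for illustration and would be replaced by a real model call.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(fn: Callable[[], object], warmup: int = 3, runs: int = 20) -> float:
    """Median wall-clock time of fn() in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up runs are not timed (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def speedup(baseline_ms: float, optimized_ms: float) -> float:
    """How many times faster the optimized run is than the baseline."""
    return baseline_ms / optimized_ms


# Placeholder workload standing in for a real model call.
def fake_inference() -> int:
    return sum(i * i for i in range(10_000))


latency = median_latency_ms(fake_inference)
print(f"median latency: {latency:.3f} ms")
print(f"speed-up example: {speedup(60.0, 15.0):.1f}x")
```

The median is used rather than the mean so that a single slow run, for example one interrupted by another process, does not skew the result.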

The collaboration between Hugging Face and Intel is a significant step towards making AI models more widely available at the edge. By pairing Hugging Face’s Transformers library with Intel’s OpenVINO toolkit, developers can run AI on a variety of edge devices, from local servers to IoT gateways, and build real-time applications for industries such as healthcare, manufacturing, and retail. Running models directly on the device can improve user experience, increase privacy, and reduce latency.

In summary, the partnership combines Hugging Face’s extensive collection of NLP models with Intel’s hardware optimization capabilities, so developers can deploy AI models directly on edge devices and operate them efficiently and independently of the cloud.

Common Misconceptions

Misconception: Hugging Face is a physical entity

Some people assume that Hugging Face is a tangible object or a physical product. In fact, Hugging Face is a company that builds open-source machine learning tools and hosts an online hub of models and datasets for natural language understanding and generation.

- Hugging Face is not a physical toy or object that can be hugged.

- It is a company whose tools run as software and on online platforms.

- Hugging Face provides pre-trained models and tools for language-related tasks.

Misconception: Intel only manufactures processors

Many people associate the name Intel solely with processors. Intel is indeed a leading processor manufacturer, but it is a broader technology company that offers a wide range of products and services across the computing industry.

- Intel also produces other hardware components like solid-state drives and network adapters.

- Intel develops software solutions related to artificial intelligence and big data analytics.

- Intel provides cloud computing services and infrastructure.

Misconception: Hugging Face can understand emotions

One common misconception is that Hugging Face’s models can accurately understand human emotions. Models hosted on Hugging Face can process and generate text, and some can label text with a sentiment or emotion category, but they do this through statistical patterns learned from training data rather than human-like understanding.

- The models’ capabilities are limited to tasks such as natural language processing and generation.

- They do not possess emotional intelligence or empathy.

- They can produce text that sounds emotional but have no genuine emotional understanding.

Misconception: Intel is only focused on the personal computer (PC) market

Another misconception is that Intel focuses solely on the personal computer (PC) market. While Intel has a strong presence in the PC industry, its activities extend well beyond it.

- Intel is heavily involved in the development of data centers and cloud computing infrastructure.

- Intel invests in emerging technologies like artificial intelligence and autonomous vehicles.

- Intel collaborates with various industries such as healthcare, automotive, and aerospace.

Misconception: Hugging Face can replace human interaction

Some people mistakenly believe that the models and tools from Hugging Face can completely replace human interaction or serve as a substitute for human conversation. They can help generate text and support language-related tasks, but they are not a replacement for genuine human interaction.

- Language models do not understand complex social cues and emotional nuances the way humans do.

- Hugging Face’s tools are designed to augment human capabilities rather than replace them.

- They can be useful in certain contexts, but they cannot fully replicate the depth of human communication.

Hugging Face’s Number of Users by Year

Since its launch, Hugging Face has gained a substantial user base. The table below showcases the number of users the platform has attracted each year.

| Year | Number of Users |
|---|---|
| 2017 | 10,000 |
| 2018 | 50,000 |
| 2019 | 200,000 |
| 2020 | 500,000 |
| 2021 | 1,000,000 |

Intel’s Revenue Growth (in billions of dollars)

Intel Corporation, a leading technology company, has witnessed substantial revenue growth over the years. The table below displays the revenue generated by Intel from 2015 to 2020.

| Year | Revenue (USD billions) |
|---|---|
| 2015 | 55.36 |
| 2016 | 59.39 |
| 2017 | 62.76 |
| 2018 | 70.85 |
| 2019 | 72.04 |
| 2020 | 77.87 |

Hugging Face’s Model Library Comparison

Hugging Face’s model library offers a wide range of pre-trained models for various natural language processing tasks. The table below provides a comparison of different types of models available on Hugging Face.

| Model Type | Number of Models |
|---|---|
| BERT | 84 |
| GPT-2 | 27 |
| RoBERTa | 46 |
| XLM-RoBERTa | 35 |

Intel’s Market Share Comparison

Intel has long been a dominant player in the semiconductor industry. The table below compares Intel’s market share to its competitors in 2020.

| Company | Market Share |
|---|---|
| Intel | 68.8% |
| AMD | 20.1% |
| NVIDIA | 8.1% |
| ARM | 3% |

Hugging Face’s Funding Rounds

Hugging Face has successfully raised funds to support its growth and development. The table below summarizes the funding rounds and amounts secured by the company.

| Round | Funding Amount (USD millions) |
|---|---|
| Seed | 5 |
| Series A | 15 |
| Series B | 40 |

Intel’s Research and Development Expenses (in billions of dollars)

Intel dedicates a significant portion of its resources to research and development (R&D) to drive innovation. The table below showcases Intel’s R&D expenses over the years.

| Year | R&D Expenses (USD billions) |
|---|---|
| 2015 | 12.11 |
| 2016 | 13.19 |
| 2017 | 13.35 |
| 2018 | 13.61 |
| 2019 | 14.55 |
| 2020 | 13.95 |

Hugging Face’s Community Contributions

Hugging Face has a vibrant community of contributors, actively engaged in improving its offerings. The table below highlights the number of contributions made by the community.

| Year | Number of Contributions |
|---|---|
| 2018 | 2,500 |
| 2019 | 6,000 |
| 2020 | 12,500 |
| 2021 | 18,000 |

Intel’s Employees by Region

Intel employs a diverse workforce across different regions globally. The table below provides an overview of Intel’s employee distribution by region.

| Region | Number of Employees |
|---|---|
| North America | 50,000 |
| Europe | 25,000 |
| Asia-Pacific | 80,000 |
| Latin America | 10,000 |

Hugging Face’s GitHub Stars

Hugging Face’s open-source projects on GitHub have gained significant popularity. The table below lists the number of GitHub stars for some of Hugging Face’s repositories.

| Repository | Number of GitHub Stars |
|---|---|
| transformers | 40,000 |
| datasets | 25,000 |
| tokenizers | 12,000 |

In conclusion, both companies bring substantial momentum to the collaboration. Hugging Face’s user base has expanded each year, and with a rich model library, active community contributions, and successful funding rounds, it has solidified its position as a leading platform for natural language processing. Intel, meanwhile, has seen revenue growth and continues to invest heavily in research and development, supported by a global workforce. Together, the two companies show what a partnership can contribute to technological advancement.

Frequently Asked Questions

Hugging Face and Intel

What is Hugging Face?

Hugging Face is a company that focuses on Natural Language Processing (NLP) and provides tools and libraries for working with transformer models, including the popular Transformers library.

What is Intel’s involvement with Hugging Face?

Intel has partnered with Hugging Face to optimize and accelerate Hugging Face’s transformer models using Intel’s deep learning optimizations and hardware capabilities, resulting in improved performance and efficiency.

Why is the collaboration between Hugging Face and Intel significant?

The collaboration between Hugging Face and Intel brings together the expertise of both organizations, enabling the development of faster and more efficient transformer models. This collaboration helps researchers, developers, and data scientists to leverage the latest advancements in NLP more effectively.

What are the benefits of using Hugging Face and Intel’s optimized transformers?

Using Hugging Face and Intel’s optimized transformers provides several benefits, including enhanced performance, reduced inference time, improved resource utilization, and the ability to work with larger transformer-based models on constrained hardware.

Can Hugging Face and Intel’s transformers be used for specific NLP tasks?

Yes, Hugging Face and Intel’s transformers can be used for a wide range of NLP tasks such as text classification, sentiment analysis, named entity recognition, question answering, machine translation, and more. The modular nature of the transformers allows for easy adaptation to different tasks.

How can I get started with Hugging Face and Intel’s optimized transformers?

To get started, you can visit the Hugging Face website and explore the Transformers library. Intel-specific optimizations, including the OpenVINO integration, are available through Hugging Face’s Optimum Intel library, which is documented alongside Transformers and installed as a separate package.
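One possible starting point for edge deployment is to convert a model once on a development machine and then load the converted files on the target device. The sketch below does this with Hugging Face’s Optimum Intel package, assuming it was installed with `pip install "optimum[openvino]" transformers`. The checkpoint name, the output directory, and the two helper function names are illustrative assumptions rather than part of any official workflow.

```python
# Sketch: export a Hub checkpoint to OpenVINO format once, then reload it
# from local disk. Assumes `pip install "optimum[openvino]" transformers`.
from pathlib import Path

MODEL_ID = "distilbert-base-uncased-finetuned-sst-2-english"  # example checkpoint
EXPORT_DIR = Path("ov_model")  # hypothetical output directory


def export_to_openvino(model_id: str = MODEL_ID, out_dir: Path = EXPORT_DIR) -> Path:
    """Convert the checkpoint and save model plus tokenizer to out_dir."""
    # Imported lazily so this module can be loaded without the packages.
    from optimum.intel import OVModelForSequenceClassification
    from transformers import AutoTokenizer

    model = OVModelForSequenceClassification.from_pretrained(model_id, export=True)
    model.save_pretrained(out_dir)
    AutoTokenizer.from_pretrained(model_id).save_pretrained(out_dir)
    return out_dir


def load_exported(out_dir: Path = EXPORT_DIR):
    """Load a previously exported model and tokenizer without re-converting."""
    from optimum.intel import OVModelForSequenceClassification
    from transformers import AutoTokenizer

    model = OVModelForSequenceClassification.from_pretrained(out_dir)
    tokenizer = AutoTokenizer.from_pretrained(out_dir)
    return model, tokenizer
```

Separating export from loading means the conversion cost is paid once, and the edge device only needs the exported directory rather than access to the original checkpoint.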

Are Hugging Face and Intel’s optimized transformers compatible with existing NLP libraries?

Yes, Hugging Face and Intel’s optimized transformers are designed to be compatible with popular deep learning frameworks such as PyTorch and TensorFlow, so you can integrate them into your existing NLP workflows.

Are there any performance benchmarks available for Hugging Face and Intel’s optimized transformers?

Yes, both Hugging Face and Intel often release performance benchmarks for their optimized transformers. You can refer to the official documentation or respective websites for the latest benchmark results.

Can I contribute to the development of Hugging Face and Intel’s optimized transformers?

Yes, both Hugging Face and Intel have open-source projects, and contributions from the community are welcome. You can participate in discussions, report issues, suggest improvements, and even contribute code to help enhance and expand the capabilities of the optimized transformers.

Where can I find more information about Hugging Face and Intel’s collaboration?

You can find more information about the collaboration between Hugging Face and Intel by visiting their respective websites, exploring their documentation, and following their official blogs and social media channels.